‘I should have got a stronger grip on her,’ wrote Lord Montagu in a letter home from his sickbed in Malta in 1916, after being rescued from the wreckage of the SS Persia which was hit by a German torpedo while crossing the Mediterranean.

But to his enduring pain, Eleanor Thornton, his travelling companion, personal assistant and beloved mistress, had not been saved.

“Theirs was a great love affair. Although when he came back home he was badly injured, he spent days looking for Thorn, who had been thrown overboard, searching everywhere, hoping that somehow she would turn up.”

Of course, she never did. But though the affair between the aristocrat and Eleanor Thornton ended with her death, their love was immortalised in the most unlikely of places.

It was the inspiration for the Rolls-Royce flying lady, or ‘Spirit of Ecstasy’, whose soaring curves are modelled on Thorn and recognised by motorists across the world as a symbol of quality and distinction.

Montagu’s wife, Lady Cecil, not only knew about the affair, but condoned it. For her part, Eleanor had a child by Montagu but, knowing that as a single mother she would be unable to continue to work for Montagu, gave her daughter up for adoption.

Born in Stockwell in 1880 to a Spanish mother and an Australian engineer, Eleanor Velasco Thornton left school at 16 and went to work at the Automobile Club (now the RAC). Through her work, she met all the motoring pioneers of the day, among them John Scott Montagu.

Montagu was a charismatic figure, educated at Eton and Oxford, with a great interest in travel and transport. An MP for the New Forest Division of Hampshire, he was a great car enthusiast, who came third in the Paris-Ostend road race in 1899 and is credited with introducing King Edward VII to motoring.


But he was also married to Lady Cecil ‘Cis’ Kerr, with whom he already had a daughter. When he met Miss Thornton, however, the effect was instantaneous.

“I fell in love with her at first sight,” he later said. “But as I couldn’t marry her I felt I must keep away from her as much as I could. But she began to like me and realise my feelings as well.”

In 1902, when Eleanor was 22 and Montagu 36, she went to work as his assistant on Britain’s first motoring magazine, Car Illustrated, in an office on London’s Shaftesbury Avenue.

He explained: “Before long, we discovered we loved each other intensely and our scruples vanished before our great love.”

It was a love whose light never went out. When Montagu’s father died in 1905, John Scott inherited the title, becoming the second Baron Montagu of Beaulieu, and moved from the House of Commons to the Lords.

Miss Thornton was still very much on the scene, increasing her duties as his assistant accordingly. Montagu owned a Rolls-Royce and would often take her for a spin along with Charles Sykes, an artist and sculptor. This is how Thorn came to inspire, and model for, the Spirit of Ecstasy.

Montagu was friends with the managing director of Rolls-Royce and between them they cooked up a plan for an official sculpture, which at Montagu’s suggestion Charles Sykes was commissioned to design.

Sykes used Miss Thornton as a model and The Spirit of Ecstasy, or “Miss Thornton in her nightie”, as those in the know called it, graced its first Rolls-Royce in 1911.

Whether by this time Lady Cecil had worked out the truth about the relationship between her husband and his vibrant personal assistant is not clear, but by 1915, when Montagu had to leave for India to be Adviser on Mechanical Transport Services to the government of India, she certainly did.

It had been decided that Miss Thornton would accompany Montagu onboard the SS Persia. Before the trip, Miss Thornton corresponded with Lady Cecil. Her tone is tender and conspiratorial. “I think it will be best for me to make arrangements without telling Lord Montagu – so he cannot raise objections,” she writes.

Later in the letter she writes, tellingly: “It is kind of you to give your sanction to my going as far as Port Said. You will have the satisfaction of knowing that as far as human help can avail he will be looked after.”

According to Montagu’s biographer, the family felt that Lady Cecil “became resigned, with no feelings of bitterness to her husband’s affair and took the view that if he had to take a mistress then it was as well he had chosen someone as sweet-natured as Eleanor Thornton – rather than someone who might cause a scandal.”

But their days on the SS Persia would be the last Montagu and Thornton spent together. They boarded the ship in Marseille on Christmas Day in 1915. Five days later, on December 30, they were sitting at a table having lunch when a German U-boat fired a torpedo at the ship’s hull.

The massive blast was repeated as one of the ship’s boilers exploded. As the ship began to list, icy seawater rushed in through the open portholes, and in the mayhem, Montagu and Eleanor made for the decks, which were already beginning to split.

They considered trying to find a lifeboat but there was no time. One moment, Montagu had Eleanor in his arms, the next they were hit by a wall of water and she was gone. The port side of the ship was submerged within minutes and Montagu was dragged down with it. He was wearing an inflatable waistcoat and this, along with an underwater explosion that thrust him to the surface, probably saved his life.

“I saw a dreadful scene of struggling human beings,” he later cabled home. “Nearly all the boats were smashed. After a desperate struggle, I climbed on to a broken boat with 28 Lascars (Eastern sailors) and three other Europeans. Our number was reduced to 19 the following day and only 11 remained by the next, the rest having died from exposure and injuries.”

They were eventually rescued, after 32 hours at sea with no food or water, by steamship Ningchow. Montagu convalesced in Malta, then returned home where he was flattered to read his own obituary, written by Lord Northcliffe, in the Times.

The accident left him physically frail, but for years Montagu continued to search for his beloved Thorn. He also erected a memorial plaque in Beaulieu parish church beside the family pew, giving thanks for his own “miraculous escape from drowning” and “in memory of Eleanor Velasco Thornton who served him devotedly for 15 years” – an extraordinary public display of feeling under the circumstances.

Lady Cecil died in 1919 and Montagu remarried the following year, to Pearl Crake whom he met in the South of France.

She bore him a son, Edward, who is now the Third Baron Montagu of Beaulieu. But the repercussions of the love affair did not end with the deaths of the two women involved.

The current Lord Montagu takes up the story. “My father died in 1929, when I was two and that was when the family discovered, by reading his will, that Eleanor had had a child.

“The will made provision for her, but was worded to obscure who she was. We always used to wonder who she was and were keen to find her.

“Then my half-sister Elizabeth went to live in Devon. She was standing in a fishmongers queue one day when someone said to her: ‘See that woman over there? She’s your sister’.”

The woman’s name was Joan. She was born in 1903, soon after Montagu and Thornton began their affair, and given up for adoption straight away. The curious thing was that while Eleanor made no attempt to contact her daughter, Montagu had, on occasion, met up with her.

He also wrote her a letter explaining the circumstances of her birth – “Your mother was the most wonderful and lovable woman I have ever met… if she loved me as few women love, I equally loved her as few men love…” – that she did not receive until after his death.

Joan’s behaviour was as discreet as her mother’s. She had attended her father’s funeral, but so quietly no one even noticed she was there.

Says the current Lord Montagu: “Eventually, I got in touch and took her for lunch at the Ritz. We had oysters and she said: ‘Your father always used to bring me here and we would have oysters, too.’”

Joan married a surgeon commander in the Royal Navy and had two sons, one of whom, by sheer chance, worked for Rolls-Royce.

Lord Montagu did as he knew his father would have wished. “I recognised them as full family,” he says, apologising for the tears on his cheeks as he recounts the moving story.

And so, a century after Eleanor Thornton and John Montagu met, their story has now passed into history.

But the spirit of their feelings lives on, in the form of the figurine that still graces every Rolls-Royce.

Abridged version of The Great Rolls Royce Love Story, printed in the Daily Mail, 1 May 2008

You’ll please forgive this rather random introduction. It was the above story (not the Daily Mail version, mind you) in the Buckler’s Hard Maritime Museum (in one small corner of a much larger museum), discovered while on holiday two weeks ago, that piqued my interest. [Not just mine either – you can find out much more at https://sspersia1915.wordpress.com/]

The SS Persia was sunk off Crete, while the passengers were having lunch, on 30 December 1915, by German U-Boat commander Max Valentiner (commanding U-38). Persia sank in five to ten minutes, killing 343 of the 519 aboard.

The whole story struck me as fascinating: Lord Montagu and his love affair; the inflatable waistcoat he had purchased in advance of the trip, which likely saved his life (the actual jacket is in the museum); the voyage of a British steamer through the openly hostile waters of the Mediterranean; and the U-Boat commander’s apparent disregard for civilian lives.

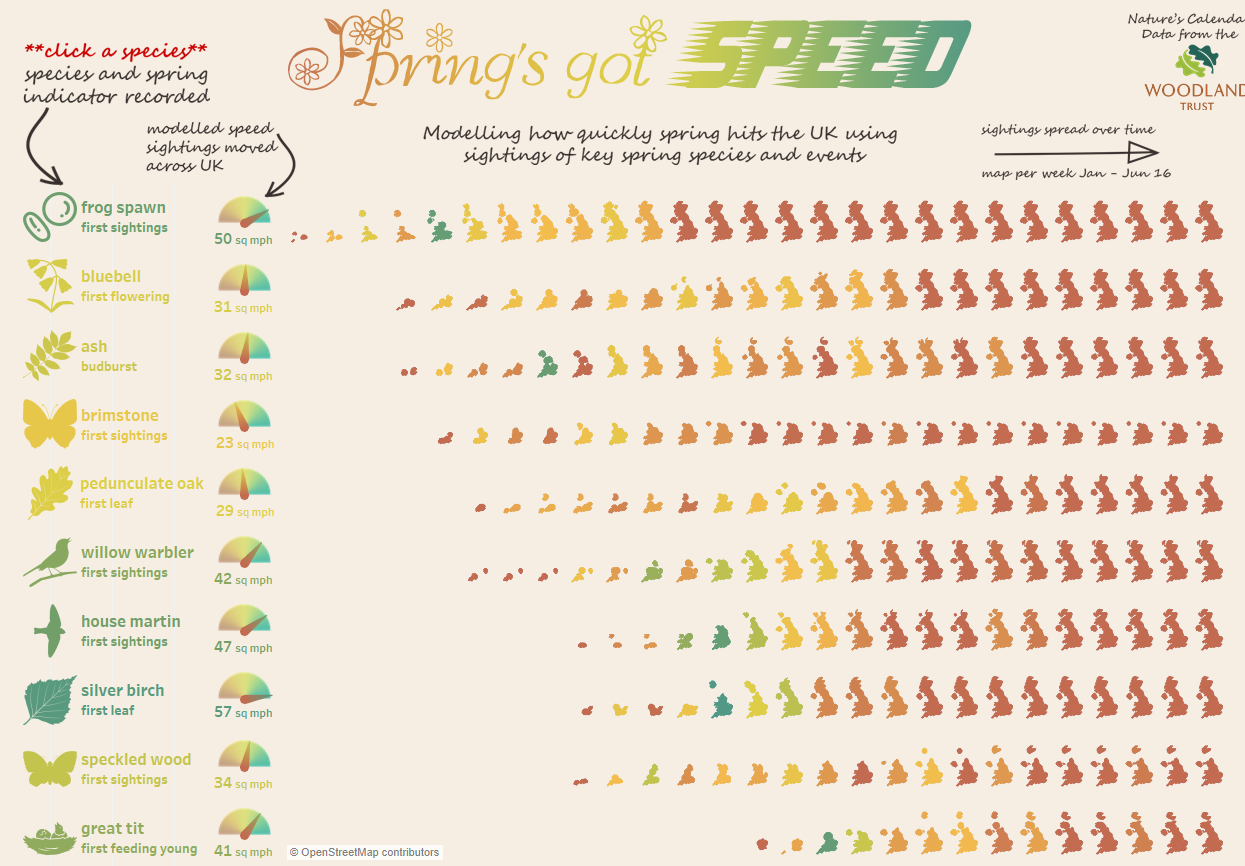

Having long held a fascination with U-Boats, when I was deciding on a theme for my Water Iron Visualisation I decided to research the U-Boats of World War 1 in more detail. You can see my visualisation below (click the picture to explore).

Data Gathering

Looking for data, I found uboat.net. This amazing site lists every ship attacked by U-Boats in both World Wars and also plots the location of the majority of them, where known. It also includes details of the attacking U-Boats and their commanders, as well as the fate of each U-Boat itself. It’s a real tour de force and I spent a long time perusing the site.

I have a difficult time with the morals of scraping data from third-party sites. Clearly someone has put time into collecting this data and it’s theirs to share, but I decided to use the data under the principles of fair use. I already have access to the data, I made a whole copy, I am using the data for computational analysis and study, and my work will increase interest in the site (well, I certainly hope it will). I have also attributed the data. Let me know your thoughts on a fairly grey area that I (and others) struggle with.

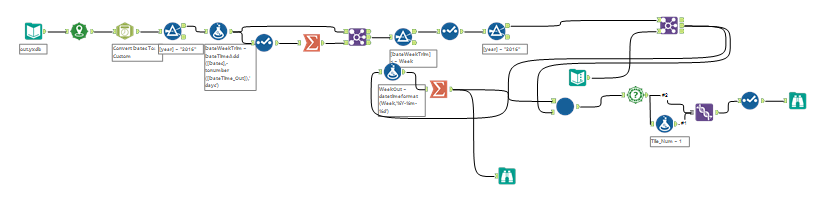

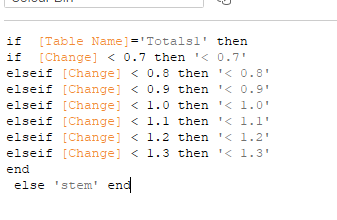

I used the Alteryx Download tool to download the data.

This looks complex, but the initial starting point was just half of the first row of tools, which downloaded the ships hit. As I built the visualisation I added other segments that downloaded details of the U-Boats and their fates, parsed out their sinking locations from the notes using regex, and joined on ocean areas geographically. Each task was a discrete item consisting of a few tools, added after I’d spent time with the data set in Tableau building and analysing.
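For anyone curious what parsing a location out of free-text notes can look like outside Alteryx, here is a minimal Python sketch. The note wording and the coordinate pattern are assumptions made purely for illustration (the real notes may well be formatted differently), and `parse_position` is a hypothetical helper, not part of my workflow.

```python
import re

# Assumed pattern: decimal degrees followed by a hemisphere letter,
# e.g. "51.20N, 2.55E". The real notes may use a different format.
COORD_RE = re.compile(
    r"(?P<lat>\d{1,2}(?:\.\d+)?)\s*(?P<ns>[NS])[,\s]+"
    r"(?P<lon>\d{1,3}(?:\.\d+)?)\s*(?P<ew>[EW])"
)


def parse_position(note):
    """Return (latitude, longitude) as signed decimals, or None if no match."""
    match = COORD_RE.search(note)
    if match is None:
        return None
    # South and West become negative values.
    lat = float(match["lat"]) * (1 if match["ns"] == "N" else -1)
    lon = float(match["lon"]) * (1 if match["ew"] == "E" else -1)
    return lat, lon


# Made-up example notes, not taken from any real record.
notes = [
    "Sunk by a mine at 51.20N, 2.55E. No survivors.",
    "Surrendered at the end of the war.",
]
positions = [parse_position(n) for n in notes]
```

Notes without a recognisable position simply come back as `None`, which makes it easy to count how many records still need attention before plotting.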

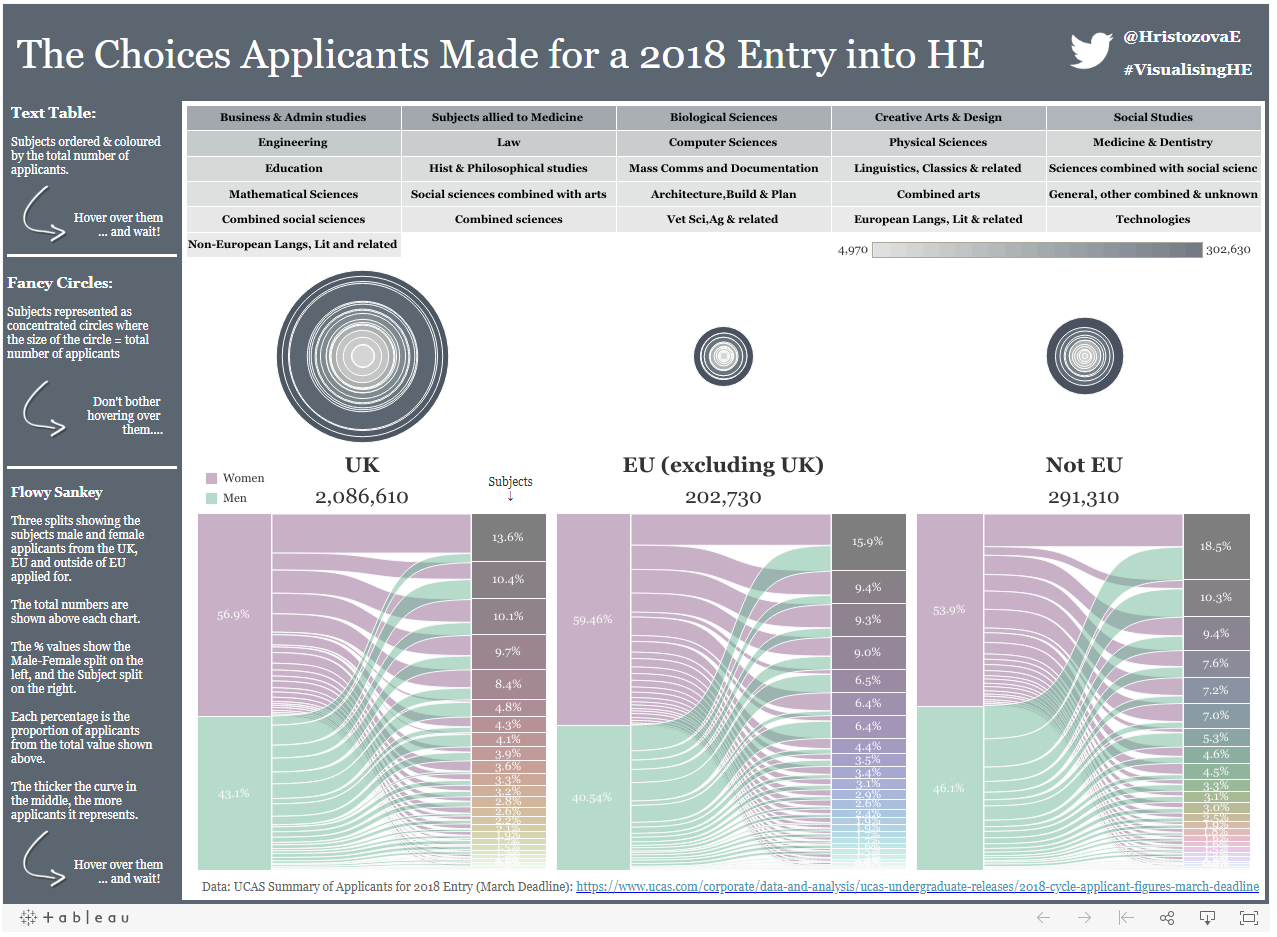

This back-and-forth workflow is key to the way I work and I talk about it a lot. I make no apologies for that, though, as it really is essential to the way I think and work. I had a few draft design ideas in my head but I never sketch things out or plan them ahead of time – I prefer to let the data guide my hand and eye, eventually using the analysis and visualisations I create to inform the final viz and design. I created 40 separate visualisations as part of this project and then stepped through them looking for stories in an effort to weave together a narrative.

Inevitably there are elements it’s frustrating to miss out. You’ll find no mention of the U-Boat commanders with the highest number of kills in the final viz, for example – partly because I didn’t want to glorify the horrific crimes they committed, and partly because they just didn’t weave into a coherent and structured story.

Design Decisions

Long form? Story-points? Wide form? Single page? I toyed with a lot of these in my head but eventually I opted for story-points as a way to tell the story. It sounds quite obvious but the decision was hard purely because of the design limitations story-points places on me as an author. The height and position of the story points for example can’t be controlled, nor can graphics be added outside the dashboard area. You’re also forced into a horizontal / left – right set of story points. All these felt like limitations that restricted me but with hindsight they probably made my life easier from a design standpoint and stopped me worrying about large aspects of the design.

My second difficult decision was how to entwine other charts into the visualisation and story. I built the map fairly quickly and knew that would be the centrepiece of the visualisation, but I wasn’t sure how to bring other charts in without affecting that beautiful look Mapbox had helped me achieve with the map. I’m not sure I’m 100% happy with the result, but by keeping colour schemes similar and also using a lot of annotations in both (and keeping the consistent top-left annotation) I think I just about got away with what I was trying to achieve. This consistency was a key factor at play in designing the whole visualisation.

Data Visualisation and Analysis

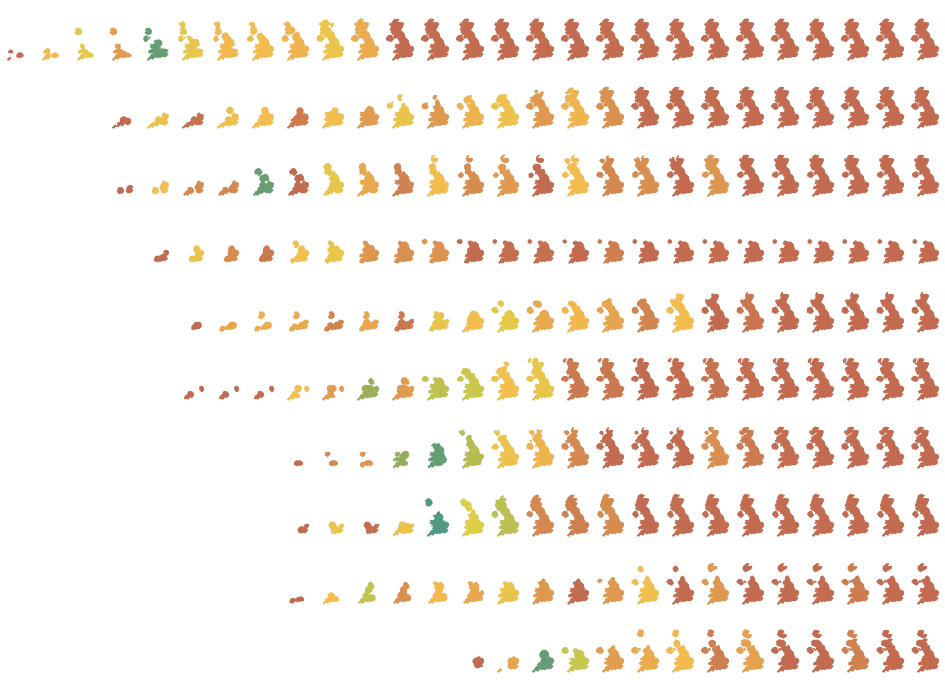

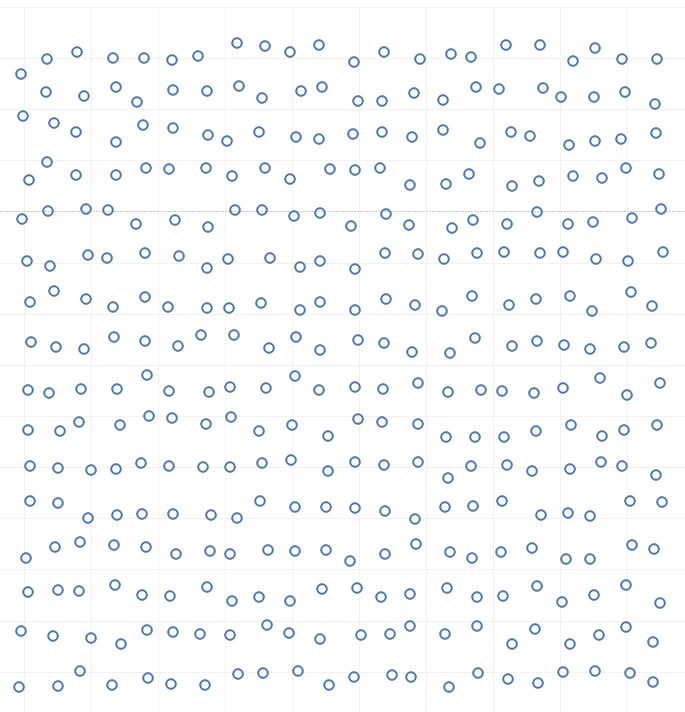

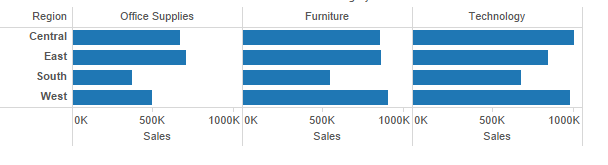

From a visualisation perspective the biggest thing I struggled with was the occlusion of the attacks on the map. My original larger points offered a good differential to see the different tonnage involved, but the smaller points, second image below, offer less occlusion, thus giving more visibility of the true density. Playing with opacity wasn’t an option (see below) and so I opted for the smaller points in the end, despite them being a touch harder to see.

Would the new Tableau Density Mark type have helped?

In this case, no, I don’t think it would have – I prefer to show every single attack and sinking rather than obscuring them into an amorphous blob. Density mapping works to tell me where the main theatres of war were but, in my opinion, doesn’t help pull out the main attacks or help the story. Likewise, the two combined is even worse – yuck (perhaps improving the colours here may help).

So for those dying to see the new Density Mark feature, as with everything in data visualisation, my advice would be it’s best used sparingly and for the right use case.

Other data visualisation matters concerned the use of rolling averages and rolling sums. How understandable is a rolling sum of U-Boats sunk? It allows a useful comparison measure when there are low numbers (much more so than an average) but takes some understanding. Here I opted to include the visualisation with some explanation.
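To make the difference concrete, here is a small Python sketch comparing a trailing rolling sum with a trailing rolling average. The monthly counts are invented for illustration, the three-month window is an arbitrary assumption, and `rolling` is a hypothetical helper rather than anything taken from the viz itself.

```python
def rolling(values, window, func):
    """Apply func to each trailing window of up to `window` items.

    Early positions use however many values are available so far.
    """
    return [
        func(values[max(0, i - window + 1): i + 1])
        for i in range(len(values))
    ]


def mean(xs):
    """Arithmetic mean of a non-empty list."""
    return sum(xs) / len(xs)


# Invented monthly counts of U-Boats lost (not real data).
monthly_losses = [0, 1, 0, 0, 2, 1, 0, 3]

rolling_sum = rolling(monthly_losses, 3, sum)
rolling_avg = rolling(monthly_losses, 3, mean)

print(rolling_sum)  # [0, 1, 1, 1, 2, 3, 3, 4]
```

With counts this low the rolling sum stays in whole boats, which reads more naturally than an average of a third of a boat, even though both lines have exactly the same shape once the window is full.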

Storytelling

Storytelling was to be a huge focus of the visualisation and a key to pulling the whole thing together. A timeline of the war was to be the structure and I was keen not to deviate from that – using data to illustrate points I’d pulled from research (with limited time it’s hard not to focus on just one source, but I pulled several together and actually ignored some claims made in some sources, as I couldn’t justify them with the data I had).

I wanted the timeline to focus on the human and political aspects of the visualisation, as well as some of the famous, and not so famous, stories. I also wanted to get across the full scale and horror of these attacks – thousands dying trapped in a sinking ship under a storm can sometimes get lost as an emotionless point on the map. These aims were not easy to bring together, especially in a limited time-frame, and I’m most disappointed about the last point – I feel I’ve not truly got across the nature of those deaths and the true horror of the sinkings. That said, I feel it’s very hard to achieve in visualisations without falling into cliched visualisation types, all of which have been done before, and so rather than go down that rabbit hole I stuck to telling the stories in the limited space I had and trusted the user to imagine the horror.

Balance between the text, the visualisations and keeping the length of the story relatively short was another difficult aspect. Again I’m relatively happy with the result but I had to sacrifice a lot of the detail and miss out interesting stories and acts of heroism in an effort to keep it short (including my original inspiration above). I’ve no real advice here for others except that it is a constant juggling act and something you’ll never be truly happy with.

Wish list

I thought I’d finish with a list of things in Tableau that I found hard, or harder than they should be. Spending just 24 hours on data collection and visualisation of this scope is testimony to the geniuses who build Tableau and Alteryx, but there are always ways it could be improved – here are some of my pain points and how I overcame them.

Borders on Shapes – I wanted to keep the U-Boat sinkings a different shape from the attacks (circles) for obvious reasons, but the result was the circles lost their border… not ideal! For aesthetic and analytical reasons I think the border adds a lot.

The workaround I applied was to duplicate the data and add a tiny portion to the size of the shadow data point, then colour the two differently. I then ordered the points in the detail pane by an ID so the shadow appeared directly below the mark and no other marks were between them. Each “mark” in the viz was actually two, one real and then the shadow below it.
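The data-prep half of that workaround can be sketched in a few lines of Python. This is only an illustration of the idea, not how I built it: the field names (`id`, `size`, `layer`), the border increment and the `with_shadows` helper are all made up for the example, and it assumes rows earlier in the sort order end up drawn underneath later ones.

```python
def with_shadows(marks, border=0.5):
    """Return two rows per mark: a slightly larger shadow, then the real mark."""
    rows = []
    for mark in marks:
        # The shadow copy is a touch bigger so it peeks out as a border.
        shadow = dict(mark, layer="shadow", size=mark["size"] + border)
        real = dict(mark, layer="real")
        rows.extend([shadow, real])
    # Sort by id, then layer, so each shadow sits directly before its own
    # mark and no other mark falls between the pair.
    order = {"shadow": 0, "real": 1}
    return sorted(rows, key=lambda r: (r["id"], order[r["layer"]]))


# Invented marks for illustration only.
marks = [
    {"id": 2, "size": 4.0},
    {"id": 1, "size": 9.0},
]
rows = with_shadows(marks)
```

Colouring by the `layer` field then gives the border colour to the shadow rows and the fill colour to the real ones, at the cost of doubling the row count.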


The real colour legend below shows what I had to do here. This was a reasonable amount of effort to work out and then removing the shadows for aggregations elsewhere was a pain (I could have used two data sources I guess).

Of course the result was I couldn’t change the opacity either.

MapBox – I had to add my own bathymetry polygons to the North Star map as their version of the bathymetry rasters didn’t work beyond Zoom Level 3, and I needed to zoom out further! This took a lot of faffing in Mapbox Studio (probably because of my inexperience).

Annotations – please, please, please can annotations be easier in Tableau, losing them every time I add a dimension to the visualisation is painful. Not to mention using them with pages in Storypoints seems to be slightly hit and miss – at one point I lost all of them, whether it was my fault or not I’m not sure but it was a painful experience.

Storypoints – as an infrequent user of Storypoints I generally found them easy to use; however, I look forward to the day I can customise and move the buttons as much as I can style the rest of my visualisation.

Alteryx – I’d love some easy web page parsing added, to be able to select patterns in HTML and pull them out – I do this so often! I’m a dab hand now at (.*?) in regex but I’d love there to be more.
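For anyone who hasn’t met the (.*?) trick, here is roughly what it does, sketched in Python rather than Alteryx. The HTML fragment, class names and ship names are invented for the example and don’t reflect the markup of any real site.

```python
import re

# Made-up HTML fragment for illustration only.
html = """
<table>
  <tr><td class="name">Example One</td><td class="tons">1,200</td></tr>
  <tr><td class="name">Example Two</td><td class="tons">7,951</td></tr>
</table>
"""

# (.*?) is a non-greedy capture: it grabs as little as possible before
# the next literal piece of the pattern, so each cell is captured alone.
ROW_RE = re.compile(
    r'<td class="name">(.*?)</td>\s*<td class="tons">(.*?)</td>',
    re.DOTALL,
)

ships = [
    {"name": name.strip(), "tons": int(tons.replace(",", ""))}
    for name, tons in ROW_RE.findall(html)
]

print(ships)
```

This works nicely on regular, predictable pages. For nested or inconsistent markup a proper HTML parser (Python ships one in `html.parser`) is usually the sturdier choice.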

Iron Viz – I go on holiday for two weeks every August; please consider using a different time for the feeder to reduce my stress levels when I return 😀

Thanks for reading – I’d love to hear your critique of my efforts in the visualisation: what worked and what didn’t. Also consider giving it a “favourite” in Tableau Public – I’d appreciate it. Here’s the link again: https://public.tableau.com/profile/chrisluv#!/vizhome/TheU-BoatinWorldWarIAVisualHistory/U-BoatsinWW1
