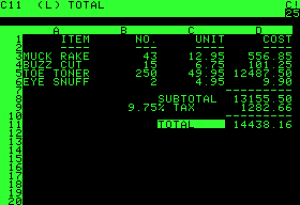

Excel is much maligned. One reason is it’s so easy to make mistakes; take the recent news that Tibco investors “lost” $100 million dollars due to a spreadsheet mistake, or the story last year that there was a mistake in the Excel calculation of a 2010 paper which has been widely cited to justify budget-cutting and austerity measures across the US and Europe.

The reason these mistakes were even possible is because spreadsheets are so easy to use they fall out of the usual testing procedures applied to other business critical applications. The people using Excel are not skilled developers, they have no development background and so checks are not simply missed, they are not even considered.

Now, as I see the take up of BI Analytics tools like Tableau, Alteryx and their brethren accelerate and start to become locally ubiquitous I feel it is important to step back and issue a warning:

Excel, Tableau, Alteryx and other similar tools are development tools, and as such should be given the same standards in quality assurance and documentation as any other development tool / project.

Now when I write development tool I mean that when you are writing an Alteryx workflow, or a Tableau visualisation, or an Excel spreadsheet, you are writing a program – a set of instructions for the software to interpret. In C# you do this by writing lines of code, in Tableau you do this by dragging pills and placing them on panes, in Alteryx by dragging an configuring tools on the canvas and in Excel by using formulae in cells. Regardless of how you get there this set of instructions needs documenting and checking.

In days gone by all “programming” was done by skilled developers who were trained in unit tests, user acceptance testing, and other disciplines with Quality Assurance teams who would have dedicated resource to find bugs and issues. Step forward 5 years and those same routines and reports are now being written and promoted by business users with no formal development testing.

Could we be in a position in 5 or 10 years from now where we see headlines such as:

“Tableau mistake costs manufacturer $500 million”

I doubt it, neither Alteryx nor Tableau suffer from the horrible obfuscation that nested a nested VLOOKUP can bring, but I am making a series point that needs considering during any BI deployment driven by the business.

Solutions

As a BI consultant I’ve been asked about this testing problem many times, and so below I set out the common practices I’ve employed as I’ve led BI deployments as a consumer, and later as a consultant.

Purple People

IT has already learned the lessons of testing and has many best practices and methodologies laid out to catch and deal with these issues. Most of these best practices can be simply applied to BI in the business universe but they need experienced leaders from the IT world, who understand the business problems to help implement and maintain them.

IT has already learned the lessons of testing and has many best practices and methodologies laid out to catch and deal with these issues. Most of these best practices can be simply applied to BI in the business universe but they need experienced leaders from the IT world, who understand the business problems to help implement and maintain them.

Purple people are the solution – if they come dressed as this guy then ideal – but regardless they should have skills from IT but with an understanding of the business world, bringing the red of IT and mashing it with the blue of business. Employing a few purple people when recruiting a BI team can bring many advantages but the rigour of process they can bring from the IT world can really help drive testing and quality assurance.

Peer Review

My first manager and mentor Simon Hamm instilled this practice in me and I will never forget it, over the years it’s caught many mistakes in teams and projects I have run. It is simple and can be applied to any BI workflow or dashboard.

The premise:

Nothing leaves the team until it has been checked by a peer, that person is responsible for finding the issues you will inevitably have made.

Peer review should be the cornerstone of the business, it should apply to everyone and the assumption during checking should be that there are errors to find. So Senior members of the team are not exempt from errors (often they are the worse culprits as they take on pressure work and publish it quickly).

in the corporate world implementing a peer review policy can also be backed up by personal objectives, instilling an ethos of checking rather than a culture of blame, e.g. replace an objective saying “In the next 6 months your reports should produce no errors” with “Everything you produce should be reviewed by a peer”. People make mistakes, removing the blame increases efficiency and moral and shifts the onus onto ensuring the policy designed to catch those mistakes works.

Smoke Testing

Fact checking and other simple functional tests should be part and parcel of the early part of any report / data testing process. Producing a dashboard on the number of people in the UK, then check the top line numbers looks right. It’s often said the devil is in the detail but it’s important that the broad numbers are checked first – I’ve seen situations where reports that have been “checked extensively” have basic headline figures with glaring mistakes, which have been missed because of the focus on the detail – I imagine this could have been the issue with the Tibco numbers.

Replicate

This sits firmly alongside peer review, as replicating the results of the module / workbook should the mainstay of the checking process and are the responsibility of the peer “checker”. Thankfully tools like Tableau and Alteryx (and other rapid development BI tools) make this easy. Checking a Tableau report? Use Alteryx to do some adhoc analysis and check the numbers. Checking an Alteryx workflow? Drop the data into Tableau and do some visual checks.

Hand crank a few rows of data, say for an individual or product, through the entire process – are the results what you’d expect? Checking a few rows is much simpler than checking 10 million.

Trend Analysis

Ensure processes track MoM and YoY trends; small data quality issues can be difficult to pick up and will only manifest themselves over time. Keeping headline QA (Quality Assurance) figures of key datasets can help track these trends and pick out issues with data processing.

Unit Testing

Modular workflows like Alteryx are easy to build but in when building them people need to ensure that checks and balances are built into the logic; tools like Message and Test can be used to build simple checks – e.g. are joins 100%? Are there duplicate records? Build in outputs at each of these key stages and ensure these row level error logs are checked if they contain data. Without these checks modules can run unattended for a long time before anyone notices key lookup tables haven’t been updated and data dropped during the process.

User Acceptance Testing

With rapid development BI comes a whole new paradigm, UAT can and should be done in an agile and flexible way. Often business users are building their own reports but even if not then co-locating individuals can lead to a much better experience for both parties.

Documentation, Documentation, Documentation

Just do it! Documentation doesn’t have to be dry and Word / Visio based though.

Annotate requirements in the tool itself (both Alteryx and Tableau provide a rich set of tools to allow users to do this as they build workflows and dashboards, and other similar tools have similar features). Comment formula and use visual workflows to provide commentary on the analysis and decisions. Hide and disable dead ends / investigations but don’t delete them – they are as useful as the finished result as they show the development process.

Also document the checking processes: released a report with an error? Learn from it. Keep a diary of checks for each dataset to refer back to, there’s nothing worse than a mistake that’s then repeated needlessly later.

Conclusion

Spiderman was once told: “With great power comes great responsibility” and that’s never been more relevant than to the new users picking up the BI tools of tomorrow. Throwing away 30+ years of software development lessons would be a shame, it’s important those lessons grow and change along with the tools.

Pingback: Best of Alteryx on the Web – November 2014 | Sciolistic Ramblings